Running MicroK8s: An Experience

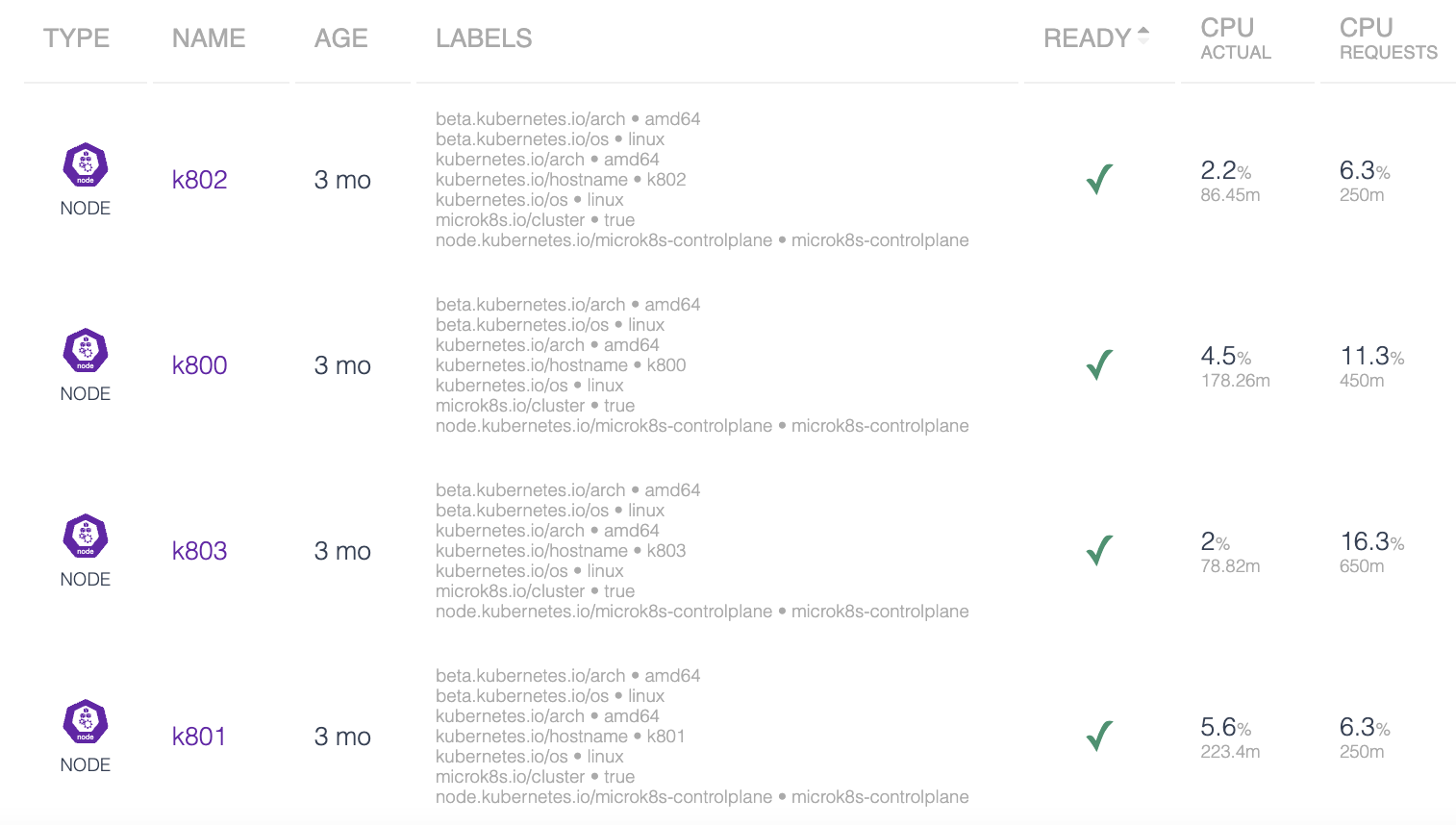

About three months ago I deployed a 4-node HA MicroK8s cluster for personal and lab-related workloads. This is a post about my positive experience with MicroK8s.

The Deployment Process 💪

In my lab, I’m running a three-node Proxmox cluster. Each node uses local NVMe storage in an LVM/JBOD configuration. The nodes and the NAS communicate with each other over 10 Gb networking.

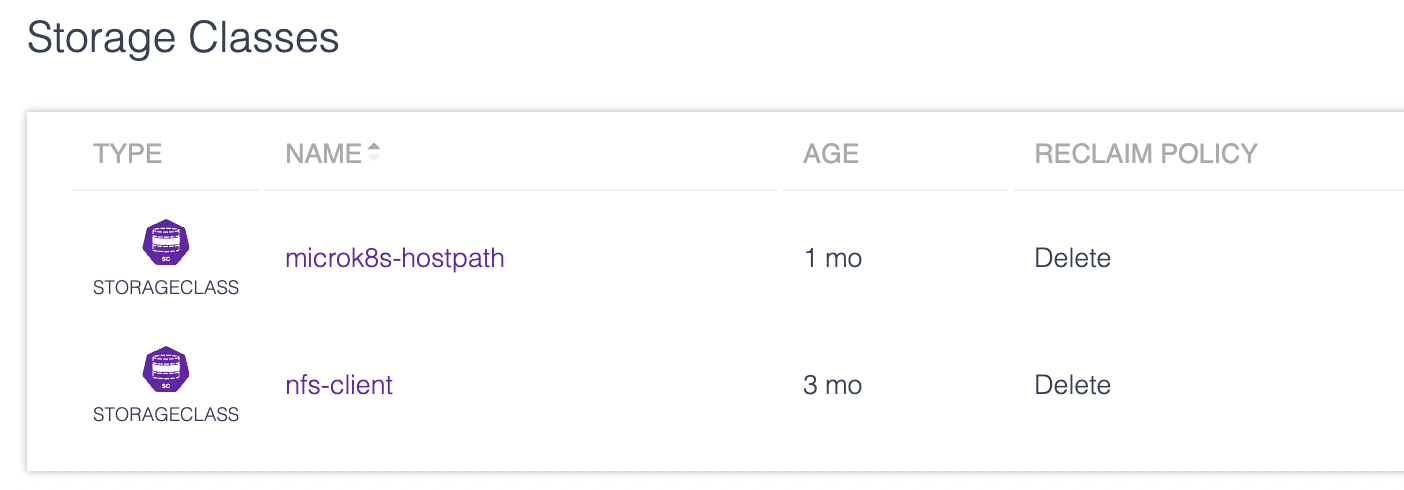

I deployed the MicroK8s nodes on Ubuntu Server and ran the cluster in High Availability (HA) mode. The server builds took around 1-2 hours of busywork. I gave the nodes static IPs in sequential order. I also built a storage server on Ubuntu that exports an NFS share to all of the nodes. The storage server is backed up to a Network Attached Storage (NAS) device at a regular interval.

Installing MicroK8s with snap and configuring the cluster took less than an hour. I quickly discovered that some features, such as MetalLB and the metrics server, are deployed through MicroK8s itself using the microk8s enable command. Some things looked different: the MetalLB configuration and some of the metrics were not what I was used to from my vanilla Kubernetes cluster. Additionally, MicroK8s exposed the API automatically, which did not match the vanilla experience.
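
To make the snap-plus-enable flow concrete, here is a minimal Python sketch that only assembles the commands for a first node and prints them. It is not a record of the exact commands I ran: the helper name `bootstrap_commands`, the `1.25/stable` channel, and the MetalLB address range are all assumptions for illustration, so swap in values that fit your own network.

```python
import ipaddress


def bootstrap_commands(channel: str, metallb_range: str) -> list[str]:
    """Return the shell commands for bootstrapping a first MicroK8s node, in order.

    `channel` and `metallb_range` are illustrative inputs, not values from the post.
    """
    start, end = metallb_range.split("-")
    # Reject a reversed pool before building any command.
    if ipaddress.ip_address(start) > ipaddress.ip_address(end):
        raise ValueError("MetalLB range start must not be above its end")
    return [
        f"sudo snap install microk8s --classic --channel={channel}",
        "microk8s status --wait-ready",
        # Prints a 'microk8s join ...' line to run on each additional node.
        "microk8s add-node",
        f"microk8s enable metallb:{metallb_range}",
        "microk8s enable metrics-server",
    ]


if __name__ == "__main__":
    # Hypothetical channel and address pool, for illustration only.
    for command in bootstrap_commands("1.25/stable", "192.168.1.200-192.168.1.220"):
        print(command)
```

The add-node step has to be repeated once per joining node, since each run hands out a fresh join command.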

This was an easy and straightforward deployment process. I would recommend this method to anyone wanting to get a high-availability Kubernetes cluster up and running without needing a lot of technical knowledge or having to deeply understand the internals of Kubernetes.

Running MicroK8s 🏃‍♂️

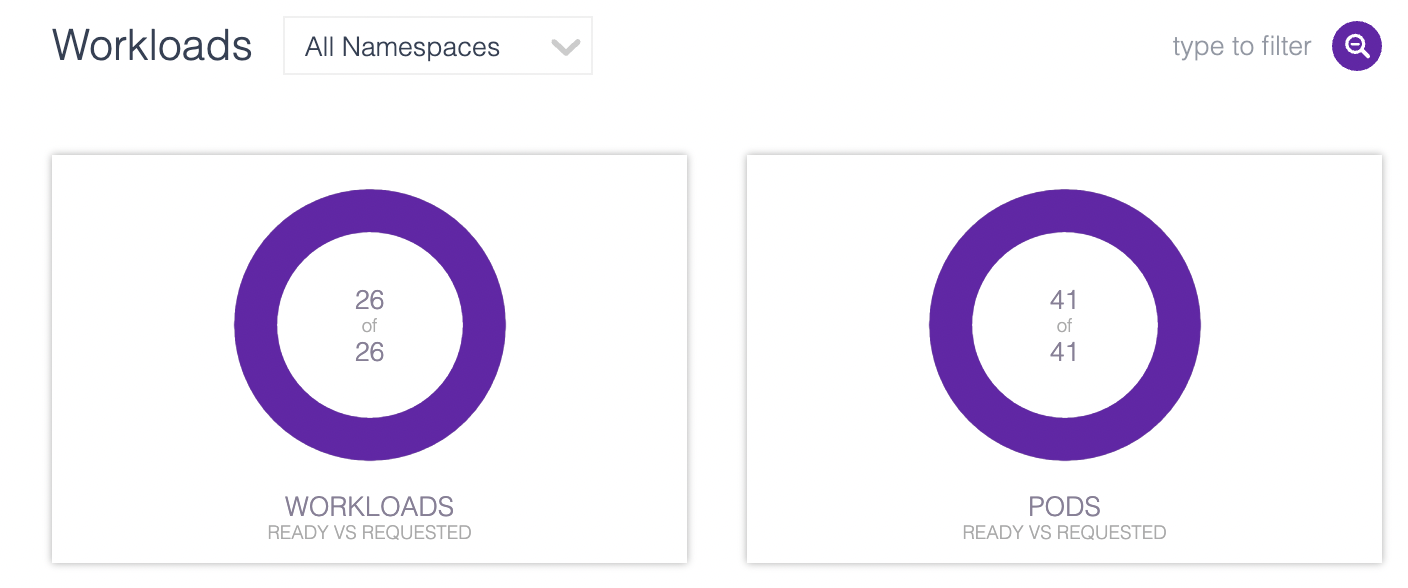

I have a library of YAML deployment files for more than 10 workloads, such as Smokeping, Grafana, OpenSpeedTest, and Guacamole. This hardware runs in my home lab, which is backed by UPSs. Unfortunately, we lost power recently and the UPSs died. Thankfully, the nodes recovered without any issues.

Patching the OS and software has been straightforward. You should drain a node before patching it, which I did once or twice. I also wanted to do some testing, so I ran apt update and apt upgrade multiple times, with reboots, on live nodes. The outcome was great, with only minor interruptions to the workloads.
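
The drain-first routine can be sketched as an ordered command list. This is a rough Python illustration rather than my actual runbook: the helper name `patch_node_commands` and the node name `mk8s-node-1` are made up, and the drain flags are common choices that you may need to adjust for your workloads.

```python
def patch_node_commands(node: str) -> list[str]:
    """Return the commands for patching one node, in the order they should run.

    The drain and uncordon commands run from any machine with cluster access;
    the apt and reboot commands run on the node being patched.
    """
    if not node:
        raise ValueError("a node name is required")
    return [
        # Move workloads off the node and mark it unschedulable.
        f"microk8s kubectl drain {node} --ignore-daemonsets --delete-emptydir-data",
        "sudo apt update",
        "sudo apt upgrade -y",
        "sudo reboot",
        # Allow workloads to be scheduled on the node again once it is back.
        f"microk8s kubectl uncordon {node}",
    ]


if __name__ == "__main__":
    # Hypothetical node name, for illustration only.
    for command in patch_node_commands("mk8s-node-1"):
        print(command)
```

Working through the nodes one at a time keeps the rest of the cluster available to pick up the drained workloads.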

The reliability of this cluster has been fantastic. The upgrades have been surprisingly simple. I haven’t moved to a newer Kubernetes release yet (I’m at 1.25), but the OS and the MicroK8s components have been smooth and effortless to run and update.

Final Thoughts 💭

The use case for deploying, testing, and running applications in MicroK8s is very compelling. The cost of operating it is incredibly low, as I have seen in my own environment. I am comparing it to a 4-node Alpine cluster running version 1.23 (released about 2 years ago), which appears to have skipped the kubeadm/kubectl 1.24 upgrade, and I can’t seem to upgrade it anymore. Kubernetes control planes and clusters must be upgraded in sequence, one minor version at a time.
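
The in-sequence rule is easy to get wrong, so here is a small Python sketch that lists every minor release a cluster has to pass through on the way to a target version. The function name `upgrade_path` is my own invention and it only handles plain `major.minor` strings. It is a planning aid, not an upgrade tool.

```python
def upgrade_path(current: str, target: str) -> list[str]:
    """List each minor version to upgrade to, in order, from current to target."""
    cur_major, cur_minor = (int(part) for part in current.split("."))
    tgt_major, tgt_minor = (int(part) for part in target.split("."))
    if cur_major != tgt_major:
        raise ValueError("this sketch only handles upgrades within one major version")
    if tgt_minor < cur_minor:
        raise ValueError("target is older than the current version")
    # Step through every minor release; none may be skipped.
    return [f"{cur_major}.{minor}" for minor in range(cur_minor + 1, tgt_minor + 1)]


if __name__ == "__main__":
    print(upgrade_path("1.23", "1.25"))  # prints ['1.24', '1.25']
```

On MicroK8s each step in that list would typically be a snap channel change, for example sudo snap refresh microk8s --channel=1.26/stable, done one node at a time.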

The built-in options and scripts for MetalLB and metrics in MicroK8s might be intimidating to experienced Kubernetes administrators, but the simplicity they provide is remarkable. I would recommend MicroK8s for any on-premises deployment and for unmanaged cloud workloads. 🚀